AI in Warfare: The Ethical Dilemma of Letting Machines Decide

18 November 2025

We’re standing on the edge of a battlefield where the trigger is no longer pulled by a human hand; it’s executed by lines of code. AI in warfare is real, it’s here, and it’s raising more questions than we have answers for. Imagine drones choosing targets without human input or autonomous robots deciding who lives or dies. Sounds like something out of a dystopian movie, right? Sadly, it’s not fiction anymore.

Let’s pull apart this beast and look at it from every angle—ethics, law, control, and what it really means to let machines make life-and-death decisions.

The Rise of Killer Algorithms

Artificial Intelligence has been transforming industries for years, but war? That hits differently. AI in warfare isn’t just about efficiency; it’s about power, dominance, and control. We’re not just talking surveillance drones or automated logistics. Nope. We’re talking about fully autonomous systems capable of making strategic decisions on the battlefield.

These machines, often called Lethal Autonomous Weapon Systems (LAWS), are designed to identify, engage, and neutralize threats without human intervention. Sounds efficient, right? Maybe too efficient.

Who’s Actually in Control Here?

Let’s be honest: once machines gain autonomy, control becomes a slippery slope. If an AI-controlled drone makes a mistake and kills civilians, who’s accountable? The programmer? The military commander? The robot itself? Spoiler alert: machines aren’t held responsible. People are. But which people? That’s where it gets murky.

Think about it: if you hand a gun to a robot and program it to shoot when threatened, and it misidentifies a kid holding a toy as a threat, who’s at fault? The one who built it? The soldier who deployed it? Or the AI itself? These are the messy, gut-wrenching questions that no one seems ready to answer.

Ethics: Not Just a Buzzword Anymore

Let’s talk ethics, because that’s where the real storm brews. The idea of machines taking lethal actions without human judgment is deeply disturbing. Why? Because ethics isn’t programmable. It’s contextual, emotional, and human.

Humans have the ability to hesitate, to feel remorse, to reconsider. Machines, driven by algorithms and datasets, don’t. They don’t understand the value of human life; they just follow code. Would you trust a machine to determine whether someone deserves to live or die based on patterns and probabilities?

A chilling thought, right?

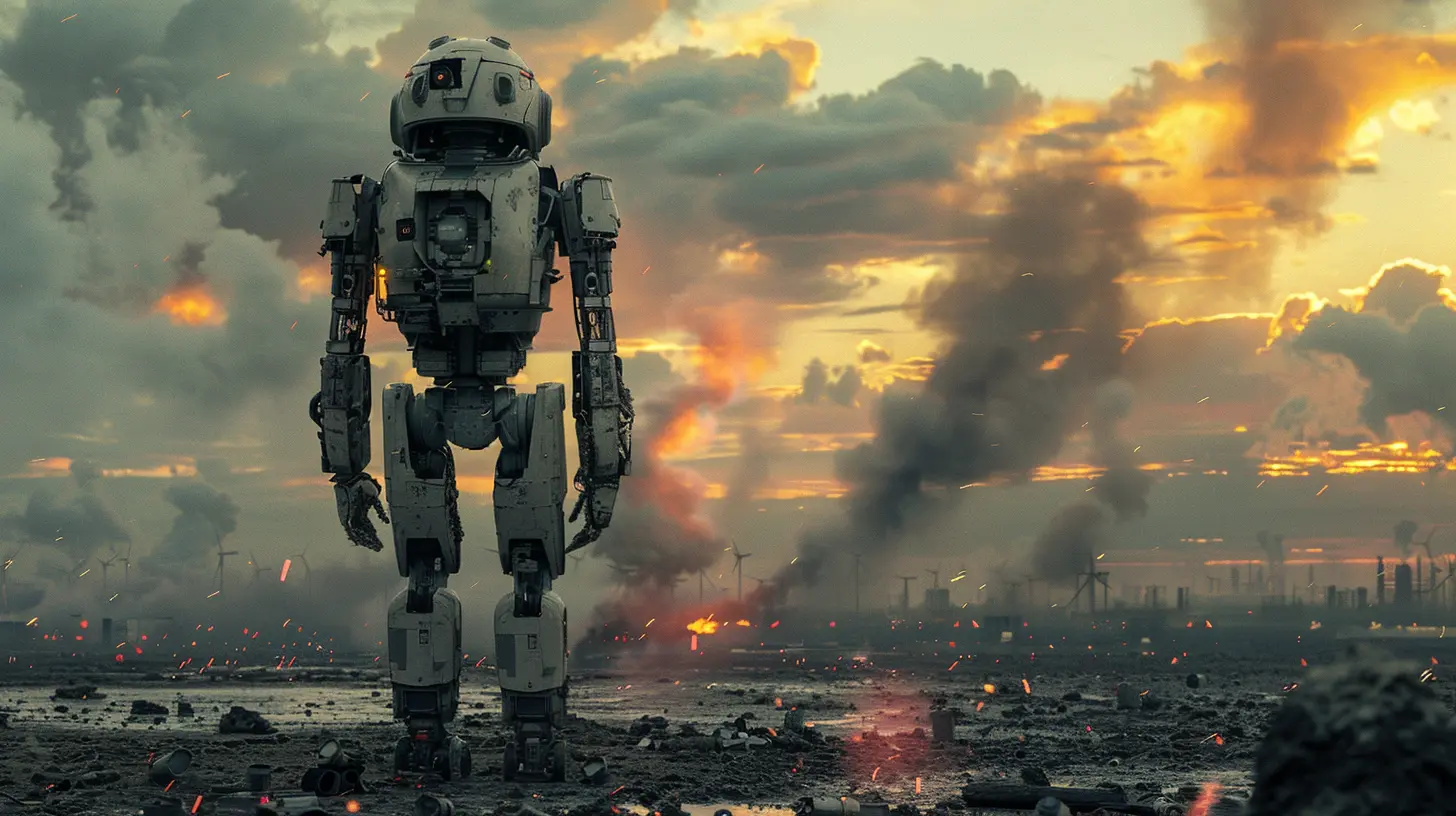

War Without Humanity

War has always been a brutal affair. But there’s a difference between brutality carried out by humans and killing done by cold, emotionless algorithms. Removing the human element from combat makes it eerily easy to wage war. It’s sanitized. It’s distant. And that’s dangerous.

Imagine a country deploying autonomous drones at the press of a button, launching attacks without ever risking human soldiers. Suddenly, the cost of war drops, not financially but ethically. If no soldiers die, leaders might be more willing to initiate conflict. That’s a nightmare scenario.

The AI Arms Race: Who’s Gonna Blink First?

Guess what? The race is already on. The US, China, Russia, and others are pouring billions into AI military technologies. And let’s be real: no one wants to fall behind. It’s like a high-stakes tech version of "keeping up with the Joneses," but with nukes and killer bots.

The irony? Everyone’s racing to build smarter AI weapons while simultaneously warning about their potential dangers. It’s like selling cigarettes while funding anti-smoking campaigns. Hypocrisy much?

Bias in the Battlefield? You Bet

AI is only as good as the data it’s trained on. And guess what? That data is often biased. If your training data mostly labels certain appearances as threats, your machine will follow suit. That means racial, gender, and cultural biases can creep into life-or-death decisions.

Think of it this way: if facial recognition software still misidentifies people of color at higher rates in civilian use, what makes us think it’ll do better in the heat of combat? One algorithmic hiccup could result in tragedy.
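To make the bias problem concrete, here is a minimal sketch of how an auditor might check whether a classifier wrongly flags one group more often than another. Everything in it is an assumption for illustration: the function name `false_positive_rates`, the group labels, and the toy records are hypothetical and do not come from any real system or dataset.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return, per group, the share of non-threats that were flagged as threats.

    `records` is an iterable of (group, actual_threat, predicted_threat) tuples.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, actual_threat, predicted_threat in records:
        if not actual_threat:  # only true non-threats can be false positives
            negatives[group] += 1
            if predicted_threat:
                false_positives[group] += 1
    return {group: false_positives[group] / negatives[group] for group in negatives}


# Hypothetical toy data: (group, actual_threat, predicted_threat)
records = [
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, True),
    ("group_a", True, True),
    ("group_b", False, True),
    ("group_b", False, True),
    ("group_b", False, True),
    ("group_b", False, False),
    ("group_b", True, True),
]

rates = false_positive_rates(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} of non-threats were wrongly flagged")
```

In this made-up data, one in four harmless people in `group_a` gets flagged, versus three in four in `group_b`. A gap like that is exactly the kind of skew that goes unnoticed if you only look at overall accuracy.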

Can a Machine Understand Proportionality?

International humanitarian law talks a lot about proportionality: making sure the expected civilian harm from an attack is not excessive compared with the military advantage anticipated. That’s a nuanced calculation that even humans struggle with.

Now ask yourself: can a robot make that judgment? Can an AI system assess complex, unpredictable battlefield situations and decide whether collateral damage is acceptable? Short answer: not yet. Maybe not ever.

The Legal Black Hole

Here’s the fun (read: not fun) part: there are almost no international laws explicitly governing the use of AI in warfare. That’s right. We’re letting countries experiment with killer robots without a global rulebook.

Sure, there are treaties like the Geneva Conventions, but those were written long before anyone imagined robots making kill decisions. We’re flying blind here, folks.

And even if regulations are created, good luck enforcing them. How do you audit an autonomous drone’s decision-making process after the fact? It’s like trying to understand a magician’s trick without seeing the setup.

Moral Offloading: Don’t Blame Me, It Was the Bot

Another dangerous side effect of AI in warfare? Moral offloading. When things go wrong (and they will), it’s easy to point at the machine and shrug. “It wasn’t me, it was the algorithm.” That’s a cop-out, plain and simple.

Removing human oversight doesn’t remove responsibility; it just muddies it. Leaders, developers, and commanders must still be held accountable. We can’t let AI become a scapegoat.

Autonomy Doesn’t Equal Superiority

Let’s squash a myth right now: autonomous doesn’t mean better. Sure, AI can process data faster than any human. But war isn’t just black and white. It’s layered, unpredictable, and emotional. Machines lack context, empathy, and intuitive judgment.

They’re tools, nothing more. Giving them control is like letting a calculator decide whether to detonate a bomb. It might be fast, but is it wise?

The People Pushing Back

Thankfully, not everyone’s on board with this AI arms race. Human rights organizations, ethicists, even some tech companies are calling for bans on fully autonomous weapons.

Movements like the Campaign to Stop Killer Robots (yeah, that’s a real thing) are gaining traction. The goal? Establish international norms before it’s too late. Because once Pandora’s box is open, good luck closing it.

Is There a Middle Ground?

Some argue that AI can be used ethically in support roles, like assisting with reconnaissance, logistics, or cybersecurity, without taking lethal autonomy too far. It’s a delicate balance: leveraging AI’s power without surrendering moral agency.

But here’s the catch: once the tech exists, the temptation to go further will be overwhelming. It’s the same slippery slope that turned surveillance tools into mass-spying systems. Today it’s assistance. Tomorrow? Autonomy.

Final Thoughts: Just Because We Can, Should We?

Here’s the million-dollar question: just because we can build autonomous killing machines, should we?

Technology isn’t inherently evil. But without boundaries, we risk creating a future where wars are fought by emotionless machines, dictated by algorithms, and justified by convenience. That’s not progress. That’s a loss of humanity.

If we truly care about ethics, international stability, and the sanctity of life, then we need to put the brakes on. Not tomorrow. Not next year. Right now.

We have a choice. Let’s not sleepwalk our way into a machine-driven battlefield with no way back.

All images in this post were generated using AI tools.

Category: AI Ethics

Author: Marcus Gray

Discussion

1 comment

Bethany McNeil

This article raises crucial ethical concerns about AI in warfare, highlighting the need for careful oversight and regulation.

November 19, 2025 at 11:26 AM

Marcus Gray

Thank you for your insightful comment! I agree that careful oversight and regulation are essential to address the ethical dilemmas posed by AI in warfare.